Fetch as Googlebot URL submission testing & review

08 October, 2011 by Tom Elliott

Google recently released an update to its “Fetch as Googlebot” webmaster tool that allows websites or individual URLs to be submitted directly to its index, and it’s supposed to be rather fast. According to the official blog, new websites are crawled and indexed within 24 hours, which would probably be faster than waiting for Googlebot to find your site if you have few or no links.

I managed to test Fetch as Googlebot on two brand new websites, both fairly small 5-6 page sites on newly registered domains. Neither site had any inbound links, so there was no way Google could have discovered them through the normal process of link crawling, which would have skewed the results. I submitted the first site at around 9am (GMT) and it was in Google’s index about 5 hours later; the second site was submitted at 11.30am and took only 2 hours to be indexed.

Despite the tiny sample size, I don’t think an average of 3.5 hours before getting listed in Google search results is at all bad! From these limited results, there’s also a temptation to conclude that sites submitted through Fetch as Googlebot get crawled at a scheduled time between 1pm and 2pm… I’ll be keen to test this with more sites to rule out coincidence, though, and I’ll post any new results as I find them. I would be interested to hear from others about their findings as well.
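As a quick sanity check on the arithmetic, here is a minimal Python sketch that works out when each site was indexed and the average delay. The times and delays are the approximate figures quoted above; the calendar date, the site labels and the helper names (`indexed_at`, `average_delay`) are placeholders made up for illustration.

```python
from datetime import datetime, timedelta

# Hypothetical calendar date: only the times of day are known from the tests.
DAY = datetime(2011, 10, 1)

# Approximate submission time and time-to-index for each test site.
submissions = {
    "site_1": (DAY.replace(hour=9, minute=0), timedelta(hours=5)),
    "site_2": (DAY.replace(hour=11, minute=30), timedelta(hours=2)),
}


def indexed_at(submitted, delay):
    """Return the approximate time a site appeared in the index."""
    return submitted + delay


def average_delay(delays):
    """Return the mean of a list of timedelta values."""
    return sum(delays, timedelta()) / len(delays)


for name, (submitted, delay) in submissions.items():
    when = indexed_at(submitted, delay).strftime("%H:%M")
    print(f"{name}: indexed around {when}")

avg = average_delay([delay for _, delay in submissions.values()])
print(f"average delay: {avg.total_seconds() / 3600} hours")
```

With only two data points, both landing in the early afternoon, this does nothing to rule out coincidence. It just makes the numbers easy to extend as more test sites are added.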

Fetch as Googlebot URL submission will be most useful for getting new websites (or web pages) indexed when they have very few or no inbound links. Since how often Google crawls an existing site (or how quickly it finds a new one) is largely dependent on how many links point to it, there’s little point using the submission tool if the site is already popular and has many inbound links.

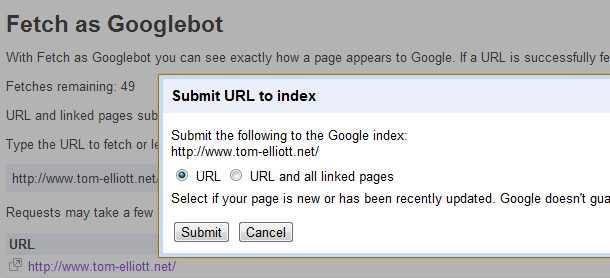

Using the submission tool itself is quick. Once logged into Google Webmaster Tools, select ‘Fetch as Googlebot’ under the ‘Diagnostics’ drop-down and click ‘Fetch’. The fetch should take a few seconds to complete with a success status, which will then allow you to ‘Submit to index’.

There is also an option to allow a website’s whole structure to be analysed. When I tested the two websites, I chose the regular ‘URL’ option, but all pages ended up being indexed anyway. There is a limit on the number of fetches, however: 50 single URL fetches per week and 10 entire site fetches per month. It’s probably best to reserve the entire site requests for existing sites whose structure has been altered with many new URLs.

Of course, you need a Google account to use Webmaster Tools in the first place. Happy indexing 🙂

2 Comments

nice

Thanks for the tip! I really enjoyed your post and found it pretty useful. I’ll be sure to test this out on my websites and let you know how things turned out.